Biography

I’m a Research Scientist at Google DeepMind, working on RL, Multi-Modality, and pretraining in the core Gemini team. I’ve been a core contributor to several releases, including Gemini 2.5 Flash Image (aka Nano Banana), Gemini 2.5, Gemini 2.0 Native Image Generation, and Imagen 4.

Before joining Google DeepMind, I was an ELLIS PhD student in the QUVA lab at the University of Amsterdam, supervised by Efstratios Gavves, Taco Cohen, Sara Magliacane, and Yuki Asano. I also temporarily joined NX-AI as a Research Scientist, working on LLM pretraining for xLSTM, and previously interned at Google DeepMind and Microsoft Research.

- Reinforcement Learning

- Multi-Modality

- Large-Scale Pretraining

- Causality and Reasoning

PhD Artificial Intelligence, 2025

University of Amsterdam

MSc Artificial Intelligence, 2020

University of Amsterdam

BSc Computer Science, 2018

DHBW Stuttgart

News

[Aug 2025] Gemini 2.5 Flash Image, aka Nano Banana, launched with a 171 Elo lead on LMArena!

[Jul 2025] I've been driving a research effort that brought Imagen 4 back to the top spot on LMArena!

[Mar 2025] We've released Gemini Native Image Generation!

[Jan 2025] I have joined Google DeepMind as a Research Scientist in Mountain View, CA, US! Looking forward to working on training the next-gen Gemini models.

[Nov 2024] I am serving as a Workflow Chair for the Uncertainty in AI (UAI) Conference 2025.

[Sep 2024] I temporarily joined NX-AI to work on scaling the xLSTM architecture to 7B parameters and beyond.

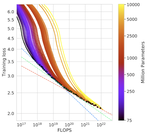

[Apr 2024] I will be a lecturer at the ELLIS European Summer School on Information Retrieval (ESSIR 2024). Looking forward to talking about recent advances in Transformers, LLMs, and scaling!

[Mar 2024] I’m co-organizing the CVPR 2024 Workshop on Causal and Object-Centric Representations for Robotics.

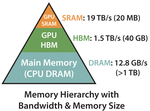

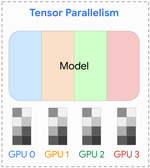

[Mar 2024] Interested in scaling models? We have released 10 new tutorial notebooks of our popular UvA-DL series, implementing data, pipeline and tensor parallelism (and more) from scratch in JAX+Flax! Check them out here.

[Feb 2024] Our paper “How to Train Neural Field Representations - A Comprehensive Study and Benchmark” has been accepted at CVPR 2024! We show how to efficiently train NeFs on large-scale datasets and analyse their properties.

[Jan 2024] Our paper “Towards the Reusability and Compositionality of Causal Representations” has been accepted as an oral at CLeaR 2024! We discuss how to adapt and compose causal representations to new environments.

[Nov 2023] Two papers accepted at the NeurIPS 2023 workshop on Causal Representation Learning! Visit us during the poster session for “Hierarchical Causal Representation Learning” and “Towards the Reusability and Compositionality of Causal Representations”.

[Sep 2023] Two papers accepted at NeurIPS 2023! We will present “PDE-Refiner: Achieving Accurate Long Rollouts with Neural PDE Solvers” as a spotlight and “Rotating Features for Object Discovery” as an oral in New Orleans.

[Aug 2023] I joined Google DeepMind as a Student Research Intern for the next three months, working on large-scale multimodal pretraining with Mostafa Dehghani and more!

[Jul 2023] Our paper “Modeling Accurate Long Rollouts with Temporal Neural PDE Solvers” has been accepted to the Frontiers4LCD workshop at ICML 2023 as an oral! We show how Neural Operators can stay accurate for longer with a fast, diffusion-like refinement process.

[May 2023] Our recent paper “BISCUIT: Causal Representation Learning from Binary Interactions” has been accepted to UAI 2023 as a Spotlight! We explore causal representation learning from low-level action information in environments like embodied AI!

[Mar 2023] I started as a Research Intern in the AI4Science team at Microsoft Research, Amsterdam! I will be working on neural surrogates for PDE solving.

[Feb 2023] I was invited to the “Rising Stars in AI Symposium” at KAUST and will give a talk on Learning Causal Variables from Temporal Observations.

[Jan 2023] We have 3 papers accepted at ICLR 2023! Check out the papers on Causal Representation Learning, Object-Centric Representation Learning, and Subset Sampling.

[Dec 2022] I gave a talk on “Machine Learning with JAX and Flax” at the Google Developer Student Club, University of Augsburg.

[Nov 2022] Our work on “Complex-Valued Autoencoders for Object Discovery” has been accepted to TMLR! Check it out here.

[Sep 2022] Our work on “Weakly-Supervised Causal Representation Learning” has been accepted to NeurIPS 2022! Check it out here.

[Aug 2022] I gave an invited talk on “Learning Causal Variables from Temporal Sequences with Interventions” at the First Workshop on Causal Representation Learning at UAI 2022! Slides available here.

[Aug 2022] I joined the ML Google Developer Expert program for JAX+Flax! In this program, I will continue creating new tutorials on various topics in Deep Learning, both in JAX+Flax and PyTorch (tutorial website).

[Jun 2022] Check out our new work “iCITRIS: Causal Representation Learning for Instantaneous Temporal Effects”, in which we discuss causal representation learning in the case of instantaneous effects (link)!

[Jun 2022] Interested in learning JAX with Flax? Check out our new tutorials on our RTD website, translated from PyTorch to JAX+Flax!

[May 2022] Our recent work, “CITRIS: Causal Identifiability from Temporal Intervened Sequences”, has been accepted to ICML 2022 as a spotlight! We explore causal representation learning for multidimensional causal factors. Check it out here!

[Jan 2022] Our work on “Efficient Neural Causal Discovery without Acyclicity Constraints” has been accepted to ICLR 2022! The paper can be found here.

[Jul 2021] I presented our work “Efficient Neural Causal Discovery without Acyclicity Constraints” as an oral at the 8th Causal Inference Workshop at UAI!

[Jun 2021] I have been accepted to the OxML and the CIFAR DLRL summer schools 2021. Looking forward to meeting lots of new people!

[Jan 2021] Our work on “Categorical Normalizing Flows via Continuous Transformations” has been accepted to ICLR 2021.

[Dec 2020] We reached 4th place in the NeurIPS 2020 challenge “Hateful Meme Detection”, hosted by Facebook AI. We summarized our approach in this paper and presented it at NeurIPS 2020.

[Oct 2020] For the 2020 and 2021 Deep Learning courses at UvA, we created a series of Jupyter notebooks that teach important concepts from an implementation perspective. The notebooks can be found here and are also published in the PyTorch Lightning documentation.

[Sep 2020] I have started my PhD at the University of Amsterdam under the supervision of Efstratios Gavves and Taco Cohen.