Hierarchical Causal Representations

Abstract

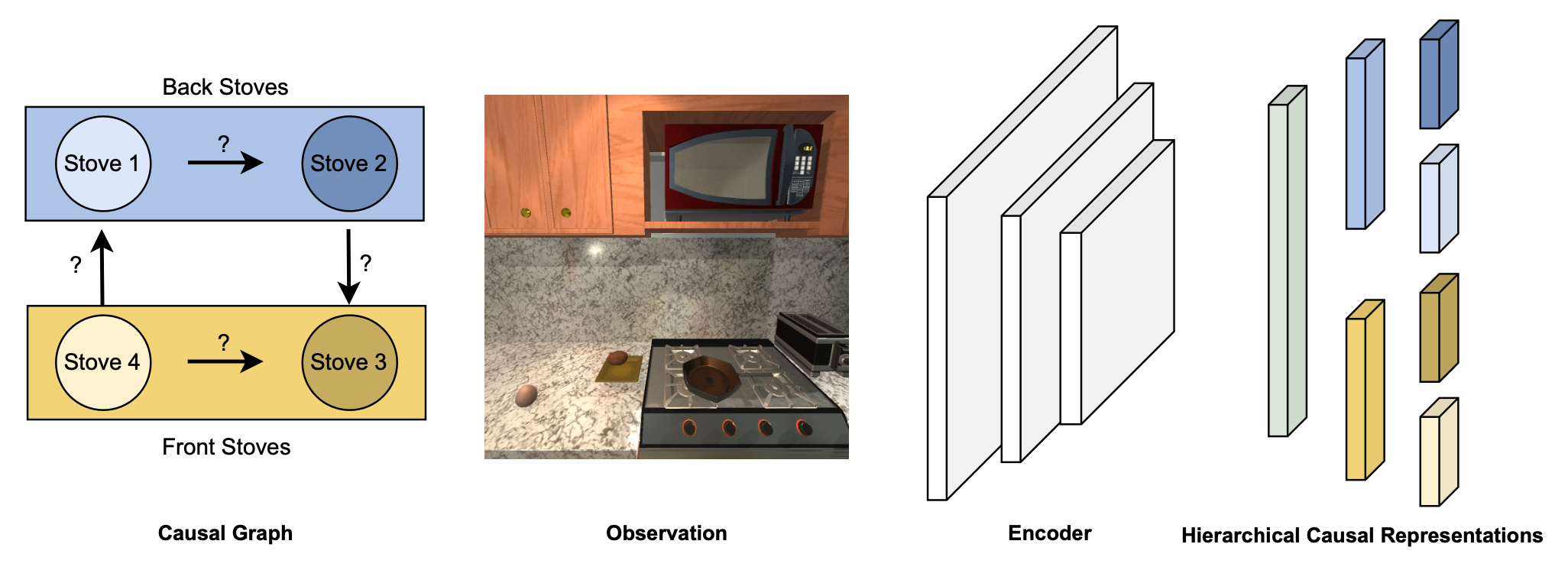

Learning causal representations is a crucial step toward understanding and reasoning about an agent's actions in embodied AI and reinforcement learning. In many scenarios, an intelligent agent begins interacting with an environment by performing coarse actions that have multiple simultaneous effects. As learning progresses, the agent acquires more fine-grained skills, each of which affects only some of the factors in the environment. This setting is underexplored by current causal representation learning methods, which typically learn a single causal representation and do not reuse or refine previously learned representations. In this paper, we introduce the problem of hierarchical causal representation learning, which leverages causal representations learned from coarse interactions and progressively refines them as more fine-grained interactions become available. We propose HERCULES, a method that builds a hierarchical structure in which each level identifies more fine-grained causal variables by leveraging increasingly refined interventions. In experiments on two benchmarks of image sequences with intervened causal factors, we demonstrate that HERCULES successfully recovers the causal factors of the underlying system and outperforms current state-of-the-art methods in scenarios with limited fine-grained data. In addition, the representations learned by HERCULES adapt well under local transformations of the causal factors.