This page was built using the Academic Project Page Template. This website is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

Modeling PDEs with Neural Operators

Partial differential equations (PDEs) are at the heart of many physical phenomena. Questions such as what the optimal design of an airplane wing is, or what the weather will be tomorrow, can often be answered by solving PDEs. Here, we focus on time-dependent PDEs, which model a solution $u(t, x)$ over time $t$ and one or more spatial dimensions $x$ (written $\mathbf{x}$ when there are several). Given an initial condition $u(0, x)$ at time 0, such a PDE can be written in the form $$u_t = F(t, x, u, u_x, u_{xx}, \dots)$$ where $u_t$ is shorthand for the partial derivative of $u$ with respect to time, $\partial u/\partial t$, and $u_x, u_{xx}, \dots$ denote the spatial derivatives $\partial u/\partial x, \partial^2 u/\partial x^2, \dots$. Two common examples of such PDEs are shown below. The 1D Kuramoto-Sivashinsky equation (left) is a nonlinear, fourth-order PDE that describes flame propagation, while the 2D Kolmogorov flow (right) is a variant of the incompressible Navier-Stokes equations that models fluid dynamics.

1D Kuramoto-Sivashinsky Equation: nonlinear, fourth-order PDE describing flame propagation.

2D Kolmogorov Flow: variant of incompressible Navier-Stokes, modeling fluid dynamics.

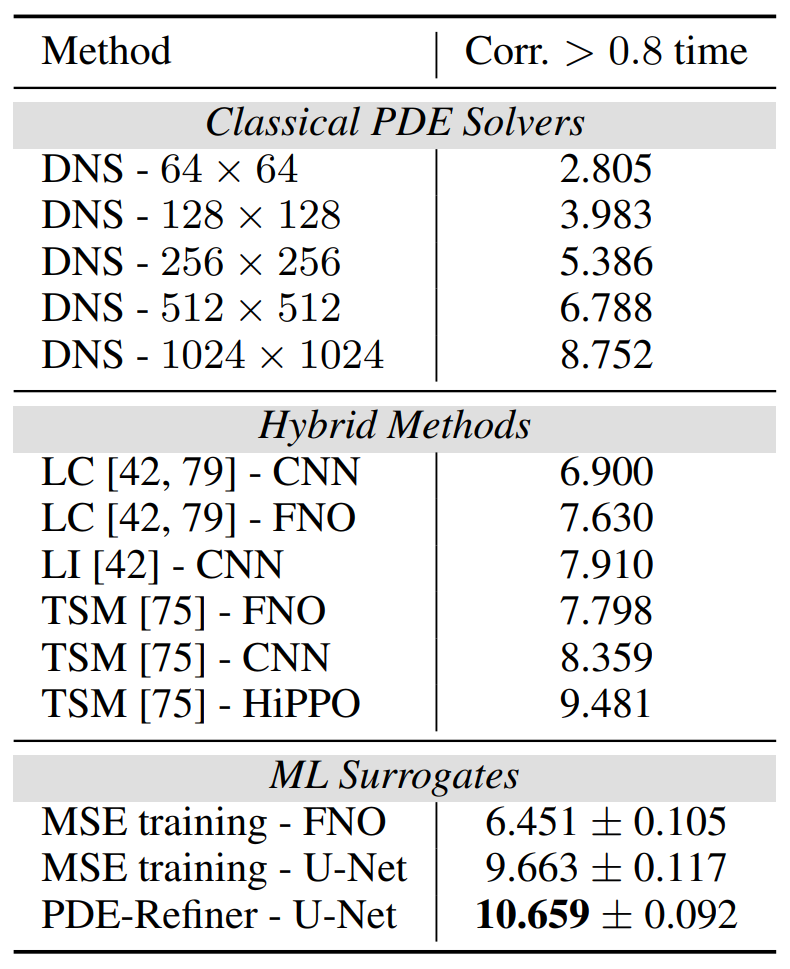
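For concreteness, both examples can be written in the general form $u_t = F(\dots)$. The equations below follow one common convention; scalings, domains, and the exact forcing differ between works, so treat the constants as illustrative rather than as the settings used here:

$$u_t = -u\,u_x - u_{xx} - u_{xxxx} \qquad \text{(Kuramoto-Sivashinsky)}$$

$$\omega_t = -\mathbf{v}\cdot\nabla\omega + \nu\,\nabla^2\omega + f(\mathbf{x}) \qquad \text{(Kolmogorov flow, vorticity form)}$$

Here $\omega$ is the vorticity, $\mathbf{v}$ the divergence-free velocity field (recoverable from $\omega$), $\nu$ the viscosity, and $f$ a forcing term that, for Kolmogorov flow, is sinusoidal in one spatial coordinate. In both cases the right-hand side depends only on the current state and its spatial derivatives.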

Both equations are relevant in many applications in which one is interested in how a physical system evolves over time. But how can we determine the solution of these PDEs at a given time for a given initial condition? Both examples are hard to solve analytically and therefore require numerical methods. Such methods typically discretize the PDE in space, approximate the spatial derivatives with, e.g., finite differences, and integrate the resulting system in time with classical ODE solvers. However, this approach can be computationally expensive: for complex systems like Kolmogorov flow, obtaining accurate solutions requires small time steps and a high spatial resolution.
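To make the discretize-then-integrate recipe concrete, here is a minimal method-of-lines sketch for the 1D Kuramoto-Sivashinsky equation, assuming the common form $u_t = -u u_x - u_{xx} - u_{xxxx}$ on a periodic domain. Spatial derivatives are approximated with central finite differences, and the resulting ODE system is integrated with the classical fourth-order Runge-Kutta (RK4) method. The helper names (`ks_rhs`, `rk4_step`, `solve`), the domain length, grid size, time step, and initial condition are illustrative assumptions, not settings used in this work.

```python
import numpy as np


def ks_rhs(u: np.ndarray, dx: float) -> np.ndarray:
    """F(u) for the 1D Kuramoto-Sivashinsky equation u_t = -u u_x - u_xx - u_xxxx.

    Uses central finite differences on a periodic grid (np.roll wraps around).
    """
    up1, um1 = np.roll(u, -1), np.roll(u, 1)  # u_{i+1}, u_{i-1}
    up2, um2 = np.roll(u, -2), np.roll(u, 2)  # u_{i+2}, u_{i-2}
    u_x = (up1 - um1) / (2 * dx)
    u_xx = (up1 - 2 * u + um1) / dx**2
    u_xxxx = (up2 - 4 * up1 + 6 * u - 4 * um1 + um2) / dx**4
    return -u * u_x - u_xx - u_xxxx


def rk4_step(u: np.ndarray, dt: float, dx: float) -> np.ndarray:
    """Advance the semi-discrete system du/dt = F(u) by one classical RK4 step."""
    k1 = ks_rhs(u, dx)
    k2 = ks_rhs(u + 0.5 * dt * k1, dx)
    k3 = ks_rhs(u + 0.5 * dt * k2, dx)
    k4 = ks_rhs(u + dt * k3, dx)
    return u + (dt / 6) * (k1 + 2 * k2 + 2 * k3 + k4)


def solve(u0: np.ndarray, dx: float, dt: float, n_steps: int) -> np.ndarray:
    """Integrate from u0 for n_steps time steps and return the final state."""
    u = u0.copy()
    for _ in range(n_steps):
        u = rk4_step(u, dt, dx)
    return u


# Illustrative setup (hypothetical values): periodic domain [0, 32*pi) with 128 points.
L = 32 * np.pi
N = 128
dx = L / N
x = np.arange(N) * dx
u0 = np.cos(x / 16) * (1 + np.sin(x / 16))

# dt = 0.01 keeps explicit RK4 stable at this resolution; 1000 steps reach t = 10.
u1 = solve(u0, dx, dt=0.01, n_steps=1000)
```

Because the fourth-derivative term is stiff, the largest stable time step of an explicit scheme like this shrinks roughly in proportion to $\Delta x^4$, so doubling the spatial resolution forces about 16 times as many steps.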

Neural Operators are trained by predicting the next time step of a PDE.

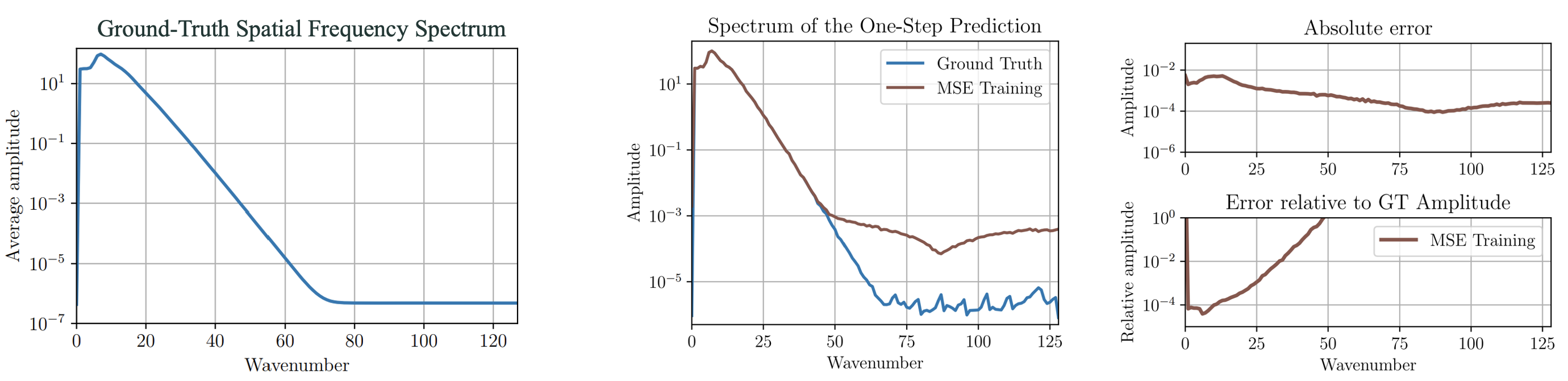

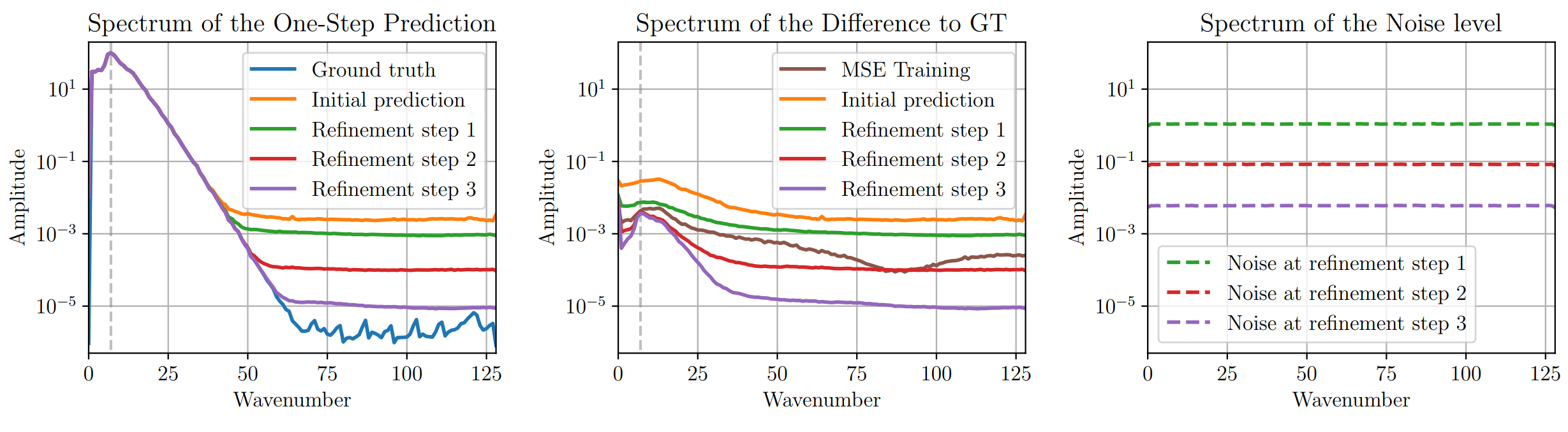

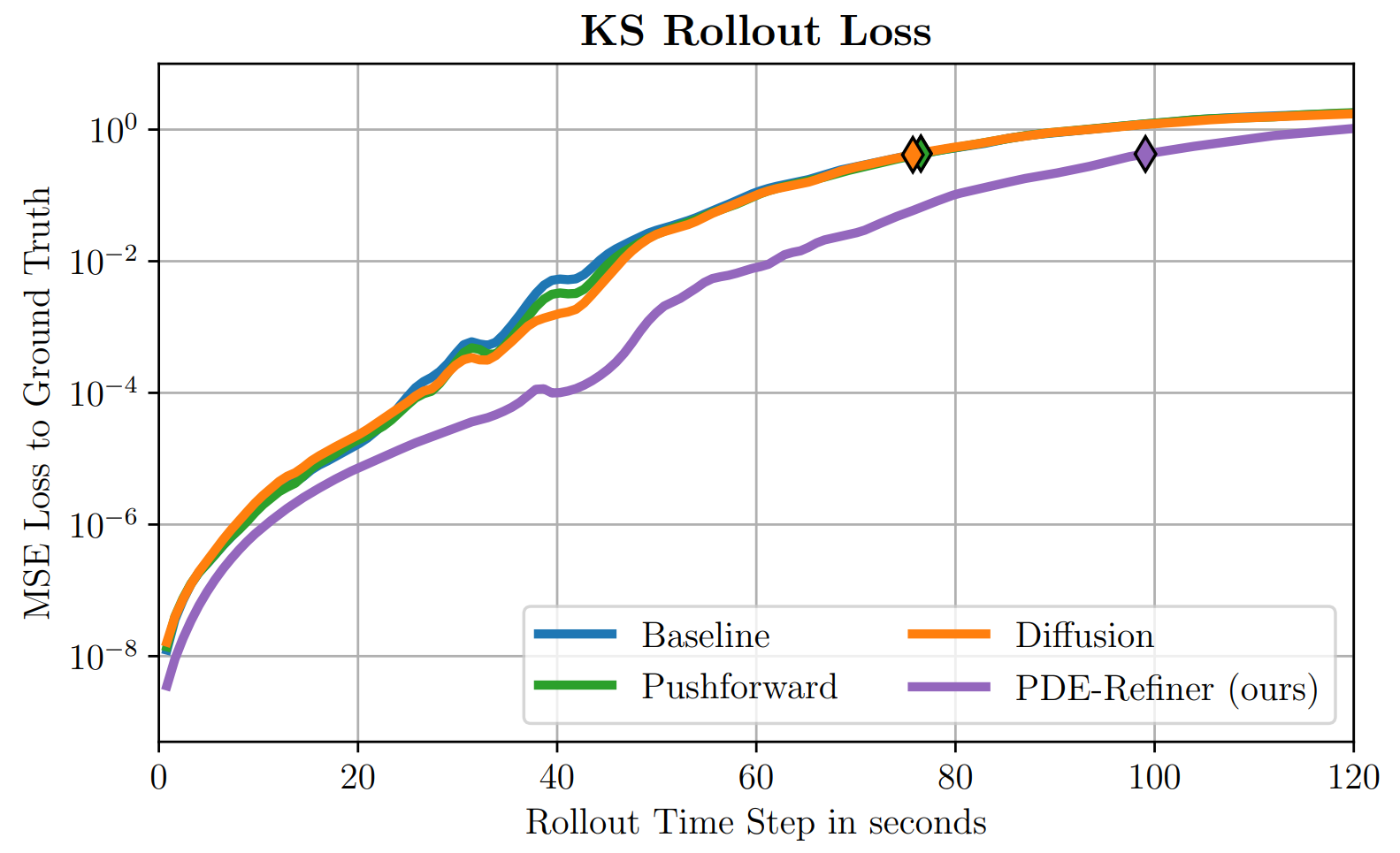

Recently, neural networks have been proposed as surrogate models for PDEs that can be trained on data to approximate the PDE solution. Such models are often referred to as neural PDE solvers. Given the solution at time $t$, $u(t)$, a neural PDE solver predicts the solution at the next time step, $u(t+\Delta t)$. It is trained by minimizing the mean squared error (MSE) between the predicted and ground-truth next-step solutions over a dataset of trajectories with varying initial conditions. Once trained, the network can predict the solution for unseen initial conditions by being applied repeatedly to its own outputs, without solving the PDE numerically.
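The training setup described above can be sketched as follows. To keep the example runnable with NumPy alone, several assumptions replace the real setting: the ground-truth data come from an explicit finite-difference solver for the 1D heat equation $u_t = \nu u_{xx}$ rather than from Kuramoto-Sivashinsky or Kolmogorov flow, and the neural network is replaced by a hypothetical learnable 3-point stencil (a linear, single-layer convolution with kernel size 3 and no activation). For such a linear model the MSE minimizer has a closed form, so `np.linalg.lstsq` stands in for gradient-based training. All helper names and parameter values are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy problem: 1D heat equation u_t = nu * u_xx on a periodic grid.
N = 64                    # number of grid points
nu = 0.1                  # diffusion coefficient
dx = 1.0 / N
dt = 0.2 * dx**2 / nu     # gives r = nu * dt / dx^2 = 0.2 (stable for explicit Euler)
r = nu * dt / dx**2


def heat_step(u: np.ndarray) -> np.ndarray:
    """Ground-truth solver: one explicit Euler step with a central second difference."""
    return u + r * (np.roll(u, -1) - 2 * u + np.roll(u, 1))


def random_initial_condition() -> np.ndarray:
    """Random smooth periodic initial condition built from the first four Fourier modes."""
    x = np.arange(N) * dx
    u = np.zeros(N)
    for k in range(1, 5):
        a, b = rng.normal(size=2)
        u += a * np.sin(2 * np.pi * k * x) + b * np.cos(2 * np.pi * k * x)
    return u


def make_dataset(n_trajectories: int, n_steps: int):
    """Collect (u(t), u(t + dt)) pairs from trajectories with varying initial conditions."""
    inputs, targets = [], []
    for _traj in range(n_trajectories):
        u = random_initial_condition()
        for _step in range(n_steps):
            u_next = heat_step(u)
            inputs.append(u)
            targets.append(u_next)
            u = u_next
    return np.array(inputs), np.array(targets)


def stencil_features(u: np.ndarray) -> np.ndarray:
    """Stack (u_{i-1}, u_i, u_{i+1}) along a new last axis for every grid point."""
    return np.stack([np.roll(u, 1, axis=-1), u, np.roll(u, -1, axis=-1)], axis=-1)


def predict(w: np.ndarray, u: np.ndarray) -> np.ndarray:
    """Surrogate model: apply the learned 3-point stencil w at every grid point."""
    return stencil_features(u) @ w


# Training: minimize the MSE between predicted and ground-truth next steps.
U_in, U_out = make_dataset(n_trajectories=16, n_steps=20)
X = stencil_features(U_in).reshape(-1, 3)
y = U_out.reshape(-1)
w, *_ = np.linalg.lstsq(X, y, rcond=None)
train_mse = np.mean((X @ w - y) ** 2)

# Inference: autoregressive rollout from an unseen initial condition.
u0 = random_initial_condition()
u_pred, u_true = u0.copy(), u0.copy()
for _ in range(50):
    u_pred = predict(w, u_pred)   # the model consumes its own previous prediction
    u_true = heat_step(u_true)    # reference solution from the numerical solver
rollout_error = np.max(np.abs(u_pred - u_true))
```

Because the toy data are generated by a linear 3-point update, the fitted stencil should recover the solver's weights $(r, 1-2r, r)$ with $r = \nu\Delta t/\Delta x^2 = 0.2$, and the rollout closely tracks the ground truth. With a nonlinear network in place of the stencil, the same MSE loss is usually minimized by gradient descent instead of in closed form.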